CrowdStrike (3 Weeks Later)

First, who is CrowdStrike?

Okay, now we have a better idea of CrowdStrike and what it does (and for who). But how does it detect and protect and all that fun stuff? In short, their products work at the operating system (OS) level. An OS is “system software that manages computer hardware and software resources, and provides common services for computer programs”. In other words, the OS is the wizard behind the curtain for any type of computing device. It works behind the scenes to conduct operations that let your device run an app, play a video, or anything else that you can think of. To monitor your device properly, CrowdStrike’s products basically get VIP access to your OS and all of its processes, programs, data, and (insert other sensitive things here). With this access, CrowdStrike stays on the lookout for anything that might be deemed suspicious, dangerous, or generally speaking, bad. The software is constantly being updated with new vulnerabilities, threats, and relevant data found by CrowdStrike’s researchers to spot patterns of malicious activity (keep this in mind, it will be important later).

The Incident (and the report)

Anyway, here’s a timeline to give you an idea of the events that took place:

- On July 19, 2024, at 04:09 UTC (bed time as far as eastern time zone folks are concerned), an update was pushed to a part of the CrowdStrike platform, the Falcon sensor for Windows. According to CrowdStrike, the specific push “released a sensor configuration update to Windows systems.”

- This release was meant to add changes to “Channel Files” (config files). CrowdStrike’s early report mentions that “updates to Channel Files are a normal part of the sensor’s operation and occur several times a day in response to novel tactics, techniques, and procedures discovered by CrowdStrike”.

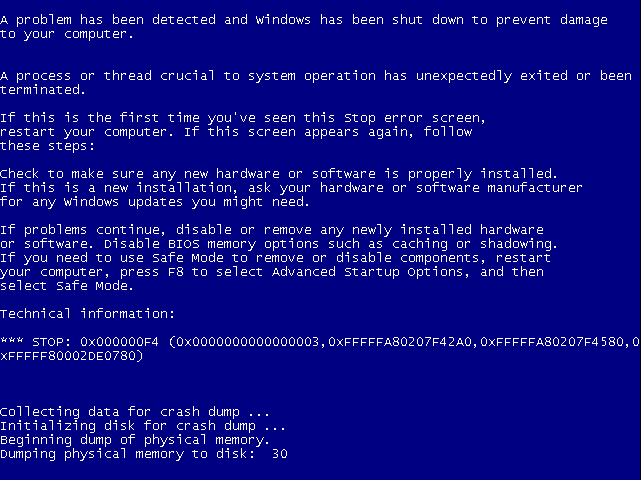

- However, immediately following this release, any device running Windows 7.11 or above experienced a system crash and a “Blue Screen of Death” (aka “BSOD”). This screen is a symptom of the fact that the device was unable to start (uh this is not so good).

- In a mad scramble, CrowdStrike released a patch within a couple hours, however devices with certain security requirements and device configurations required manual implementation of the fix (as you may have guessed, this is also not good).

and here’s some of the key points to take from the root cause report that CrowdStrike recently released:

- The core issue is actually several interconnected problems: as some of the discussion around the event noted, at a code level, this issue came from an out-of-bounds memory read. However, there’s a bit more here to take a look at.

- At a high-level, CrowdStrike’s detection abilities come from comparing data from the system it is sitting on and data from external sources (i.e the cloud) in order to find potential hostile behavior, indicators of an attack, etc. In their own words, this process leads to their “Sensor Detection Engine combining built-in Sensor Content with Rapid Response Content delivered from the cloud. Rapid Response Content is used to gather telemetry, identify indicators of adversary behavior, and augment novel detections and preventions on the sensor without requiring sensor code changes”

- This content is delivered through configuration files with certain templates (referred to as “Channel Files”) and is interpreted using a regular-expression engine. Each config file has a specific “Template Type” built into each product release. Here’s where the next issue kicks in: their releases in 2024 included a new “Template Type” that defined 21 input parameter fields, but the part of their engine that integrates these different components only supplied 20 input values to match against. Now, this might not seem like much, but this mismatch creates the pieces needed for the out-of-bounds memory read error we mentioned at the beginning. These conditions made it through many layers of validation, testing, and internal processes, where it became, in essence, a ticking time bomb.

- The next problem comes here at the validation and testing stage. Why was this bomb not detected? Well, CrowdStrike did the right thing in having validation…but the mistake came in how the validation was set up. Their testing used “wildcard matching criteria for the 21st input”…which in English, basically means that the 21st input field was treated as "anything goes here" or "this field is optional." So, as far as their validation was concerned, there was no mismatch because essentially, it would accept anything (or nothing) for that last input.

- So, at this point, we have this mismatch in parameters, which sets up a vulnerability in the system, that made it past validation and testing, and is now sitting live in production. What made the bomb go off? In CrowdStrike’s report, they note, “at the next IPC notification from the operating system, the new IPC Template Instances were evaluated, specifying a comparison against the 21st input value. The Content Interpreter expected only 20 values. Therefore, the attempt to access the 21st value produced an out-of-bounds memory read beyond the end of the input data array and resulted in a system crash.”

- This system crash prevented Windows machines from booting up, leading to the final Blue Screen of Death which most of the general public saw at (the airport, bank, F1 race, etc).

Insights

- The dominance of Windows as an OS: As mentioned earlier, Windows dominates well over half the global desktop OS market share (~70%) and continues to be the standard OS in every enterprise except tech startups and related firms. Inherited from Microsoft’s early history as the leaders in the PC sector, this overwhelming market dominance creates a common potential point of failure for IT systems worldwide. I won’t go into the full history, but years ago, Windows was (sort of) the only OS that a company could use. While that changed over time (hello open-source), this early lead has carried forward into the modern era, with Microsoft being the obvious technology vendor for most organizations. These days, the famous saying “Nobody ever got fired for buying IBM” would probably be more accurate if it read “Nobody ever got fired for buying Microsoft”. This historical wide-spread adoption now means that an issue with Windows and their ecosystem becomes an issue that impacts 8.5M machines worldwide (according to the Microsoft’s estimate). How do you address this dynamic? While we don’t have a silver bullet solution (Sitebolts is a technology firm, not a policy maker), there are some interesting points we want to highlight regarding market dynamics and vendor dominance:

- For some, the immediate thought here is antitrust action or some sort of regulation regarding monopilistic practices. However, perhaps counterintuitively, there is an argument to be made that EU regulation is what led us to this moment! As the WSJ reported, “A Microsoft spokesman said it cannot legally wall off its operating system in the same way Apple does because of an understanding it reached with the European Commission following a complaint. In 2009, Microsoft agreed it would give makers of security software the same level of access to Windows that Microsoft gets”. Basically, a while ago, the EU argued that if Microsoft was running products with Windows kernel access, they should also allow other, non Microsoft, products to run with kernel access, in order to avoid a scenario where the “market could be threatened if Microsoft doesn’t allow security vendors a fair chance of competing.”

- What are the alternatives? Well, outside of regulation, consumers and businesses should resist the temptation to go with the path of least resistance (“just buy Microsoft”) and include market dynamics as part of their risk assessment when procuring technology products from vendors. As a related note, the reason that Mac and Linux were not affected was that they handle kernel access different than Microsoft, which is also why everyone at shops that run those systems (including Sitebolts) experienced a much calmer month.

- CrowdStrike’s previous incident history: while not nearly as large of an outage as the focus of this piece, it is really interesting to note that CrowdStrike had a similar incident affecting devices running a version of Linux (Debian to be specific). A comment on HackerNews by one of the affected devs paints a picture that suggests CrowdStrike might have benefited from a bit more….urgency in their recovery processes. Here’s the full story:

“Crowdstrike did this to our production linux fleet back on April 19th, and I've been dying to rant about it.

The short version was: we're a civic tech lab, so we have a bunch of different production websites made at different times on different infrastructure. We run Crowdstrike provided by our enterprise. Crowdstrike pushed an update on a Friday evening that was incompatible with up-to-date Debian stable. So we patched Debian as usual, everything was fine for a week, and then all of our servers across multiple websites and cloud hosts simultaneously hard crashed and refused to boot.

When we connected one of the disks to a new machine and checked the logs, Crowdstrike looked like a culprit, so we manually deleted it, the machine booted, tried reinstalling it and the machine immediately crashes again. OK, let's file a support ticket and get an engineer on the line.

Crowdstrike took a day to respond, and then asked for a bunch more proof (beyond the above) that it was their fault. They acknowledged the bug a day later, and weeks later had a root cause analysis that they didn't cover our scenario (Debian stable running version n-1, I think, which is a supported configuration) in their test matrix. In our own post mortem there was no real ability to prevent the same thing from happening again -- "we push software to your machines any time we want, whether or not it's urgent, without testing it" seems to be core to the model, particularly if you're a small IT part of a large enterprise. What they're selling to the enterprise is exactly that they'll do that.”

- This definitely doesn’t come close to the impact that wider Windows incident had, but it does share some characteristics that speak to a potential larger issue at CrowdStrike. Some of the characteristic that stick out from this anecdote include a similar push to production with an apparent lack of thorough testing or even a phased roll out and a severe lack of urgency in their communications with those affected

- Fool me once, shame on you. Fool me twice, shame on….well all of us. I don’t have any special insights into CrowdStrike’s internal decision making or culture, but as I noted in the introduction, they have grown at dizzying speeds and scaled massively. This is usually framed as a positive, but I think it bears noting that typically, in organizations that prioritize growth, certain things get pushed to the side (risk management, etc). On a totally unrelated note, CrowdStrike did also lay off people in the QA department recently. Given this pattern of incidents, it might be fair to question if this growth mentality has led to a culture of “move fast and break things” that is well, uh maybe breaking things (say, the wider global IT community)

Lessons and Conclusion

Whether you are a technical or business leader, recent events probably instilled deep concern and uncertainty regarding technical outages. To help with this increased uncertainty, here are some actionable lessons and insights to consider:

- No system is an island: Modern software is an increasingly complex wave of interlocking parts, integrated solutions, and 3rd party dependencies. We are all interconnected between the different libraries, packages, and APIs that hold up our systems. This means someone’s sloppy internal processes around software updates can turn into your nightmare. IT teams should create a comprehensive map of their enterprise architecture and identify potential points of failures where their systems interact with external ones, especially ones with privileged access. This includes understanding the nature of updates at these intersections. Does your vendor do silent updates? Can you choose when to update? Is it automatic? Knowing the answers to these questions can be the difference between a gracefully handled change or weeks of down time (aka loss of business)

- “Build, buy, or partner” can be a life or death decision: Most technologists and IT folks are constantly playing the game, “should I build this myself, buy it from someone else, or partner with someone to get it done?” This game might not seem like a critical business moment when you’re choosing between CRM plugins or API providers, but as this incident has shown, someone choosing CrowdStrike over a competitor or the native Windows EDR service was the difference between an organization having a normal day or bleeding millions. It’s impossible to treat every decision as a life or death call, but leaders (both technical and nontechnical) would do well to place more weight on moments that require setting down foundations for “load bearing systems” (hint: if you use computers for anything in your business, you will have many of these moments). After all, every complex system starts as a simple one that transforms a little more after each subsequent change. It is crucial to have people making decisions with these dimensions of risk in mind, even from Day 1.

- The “blame game” has no winners: It is very tempting in situations like these to start pointing fingers, but as stated earlier, this is no way the fault of an individual person or even a specific development team. When it comes to complex technology platforms and products, it is expected that a company’s leadership has created and established robust systems that put every possible change through rigorous testing and release processes so that no single push can bring down the entire platform. With this idea in mind, it’s crucial that organizations avoid blaming individuals and instead focus on identifying the systemic issues and gaps in processes that can lead to a single mistake cascading into a production wide release. Blaming your developers only leads to the wrong lessons being learned and further, larger incidents in the future.

From our perspective, this CrowdStrike incident highlights the complexity and interconnectedness of modern IT systems and the importance of transparency, communication, and rigorous testing processes to minimize the impact of potential changes or vulnerabilities. Whether you are a CEO, IT Director, a middle manager, or just someone with a company-provided device, this should serve as a valuable learning opportunity and highlight the importance of areas of the business that may get taken for granted.

Further Reading & Credits

Since the incident, there have been a lot of sharp and insightful pieces that I used as both references and inspiration, as well as some technical reports from CrowdStrike and Microsoft. Full credit to them:

- Channel File 291 - Incident Root Cause Analysis (08.06.2024)

- Falcon Update for Windows Hosts: Technical Details

- Helping Our Customers Through the CrowdStrike Outage

- ELI5: The CrowdStrike Outage

- The Biggest Ever Global Outage: Lessons

- An Expert's Overview of the CrowdStrike Outage

- OS Market Share - Desktop (Worldwide)

- OS Market Share